
Crisis Management: Combating the Denial Syndrome

By David Davies MIRM

No matter how many times it occurs, it always comes as a surprise when a major, and often respected, organisation makes serious errors in the way it handles a major crisis. In most cases such errors have severely damaged the organisation's reputation, and in some instances they have caused loss of life or severe economic damage at a national level.

Examples that spring immediately to mind include product contamination and product safety (Perrier, Coca-Cola Belgium, the Mercedes “moose test”), serious contract over-run (the QE2 refit), loss of life (the NASA mission failures caused by O-ring and foam damage), government reaction to epidemics (BSE and foot and mouth in the UK, China’s initial response to the SARS virus) and major scandal (Andersen, paedophile priests). These are just a random handful of examples; with little difficulty the list could be expanded to fill several pages.

It gets interesting when we move the camera in more closely and look at the performance of the people entrusted to manage the crisis. In all of these cases, and hundreds more, the pattern of errors is very similar, and yet the circumstances, the types of organisation and the countries involved are all very different. What we are observing arises from the dynamics and pressures that are unique to crises. So what do we know about this strange environment, and why is it so different from the "normal" world in which the same people presumably perform with less catastrophic consequences?

We should start by defining a crisis. For the purposes of this study, a crisis is an unplanned (but not necessarily unexpected) event that calls for real-time, high-level strategic decisions in circumstances where making the wrong decisions, or not responding quickly or proactively enough, could seriously harm the organisation. Because of the type of issues involved and the penalties of failure, the crisis response group, typically assembled ad hoc, usually includes board members plus the relevant technical specialists. A catastrophe that triggers a technical response, such as an IT resumption plan or a business continuity plan, is not a crisis in this sense. A crisis, within this context, is characterised by:

- The need to make major strategic decisions based on incomplete and/or unreliable information

- Massive time pressures

- Often, intense interest by the media, analysts, regulators and others

- Often, allegations by the media and others that have to be countered

- Usually, a situation that is completely outside the experience of those involved.

These characteristics were present in the examples noted above.

This article examines the psychological processes that affect crisis teams and describes the search for a way of combating them. Had the techniques outlined here (Dynamic Crisis Management) become universal a decade ago, companies would have avoided the loss of millions of pounds in share and brand value, the UK would have avoided billions of pounds’ worth of damage to its economy, and many lives would have been saved.

Beyond the characteristics listed above, two further features are common to the way in which crises are handled. The first, known as group think, was initially linked to crisis handling in the enquiry into the NASA O-ring disaster. The engineers in the decision-making group were pressured by the commercial majority into agreeing to a launch that they felt could be unsafe. Group think has been defined as “The tendency for members of a cohesive group to reach decisions without weighing all the facts, especially those contradicting the majority opinion”.

The circumstances necessary for group think are:

- A cohesive group.

- Isolation of the group from outside influences.

- No systematic procedures for considering pros & cons of different courses of action.

- A directive leader who explicitly favours a particular course of action.

- High stress.

The unfortunate consequences of group think, present in the handling of so many crises, are:

- Incomplete survey of the group's objectives and alternative courses of action.

- Not examining the risks of the preferred choice.

- Poor/incomplete search for relevant information.

- Selective bias in processing information at hand.

- Not reappraising rejected alternatives.

- Not developing contingency plans for the failure of actions agreed by the group.

When we think about it, we find that these outcomes are indeed common to the way in which many crises are handled. The only factor that is difficult to judge from the outside is leadership. Was there a strong leader with a need to build consensus that the crisis was much nearer to the best-case scenario than to the worst and, therefore, that a costly recall, mission abort, or admission of high-level fraud or unethical practices was unnecessary? It is only because of public enquiries in a small number of cases that the dynamics within a crisis group have been revealed at all.

This takes us to the central feature of most crises: at the outset, very little information is available, and even that may be unreliable. To quote one crisis team member, “We started with very few facts – and most of those turned out to be wrong”. With most crises, there are a few fundamental questions to which answers are essential if the crisis is to be handled, but which are invariably left unaddressed in the knee-jerk reactions of a traumatised crisis team:

- Cause: What is the cause of the crisis? Often, even this basic factor is unknown. Is the contamination deliberate or accidental? Is it the work of an extortionist? Are the allegations against us true, exaggerated or totally false?

- Breadth: How widespread could it be? One batch or the global product? One farm, a county or the entire country?

- Repeatability: Could it happen again? Is it an isolated incident, or is it deliberate and therefore liable to be repeated? Is there a design or systemic defect? Are we being targeted? Could it trigger a string of copycat attacks or hoaxes?

- Blame: Are we, or will we be seen to be, at fault? If so, how "offensive" or blameworthy will our actions be seen to have been? Are our stakeholder relationships and reputational capital strong enough for us to be forgiven?

Although there are initially no reliable answers to these questions, action has to be taken, the media may be demanding answers (and making their own allegations), and there is simply no luxury of waiting until all becomes clear, which it may never do unless the key information gaps are identified and targeted.

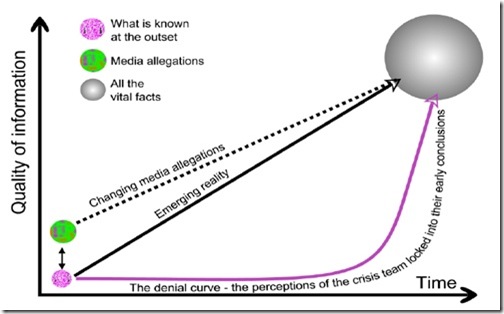

It is at this point that the denial curve often takes over.

The Denial Curve

In crisis after crisis, the common denominator is that the team handling the crisis:

- Initially plays it down. There is no evidence that the four key questions above have ever been systematically addressed but, probably in a far less structured way, the best-case answer to each question will have been assumed, even if not actually expressed. At this stage the crisis handlers are merely interpreting the meagre information that they have, but they are doing so under the strong influence of trauma-induced denial and often with the help of group think.

- Ignores, or at least heavily discounts, any new incoming information that contradicts the optimistic view that they first formed. By now the team will be communicating their optimism to the media, even presenting alternative “no fault” or “not widespread” theories before they have been substantiated: not bad maintenance but vandalism (Jarvis/Hatfield rail disaster); not contamination of the source but cleaning fluid spilt by a cleaner into just one production line (Perrier); not a design fault but a fluke (Mercedes “moose test”).

- Finally, when the weight of evidence becomes overwhelming, the crisis group swings into line in one of three ways:

  - It underplays the significance of the change of direction (Perrier).

  - It quietly changes course (the most common response).

  - It grossly over-compensates (foot and mouth, BSE). It may not be a coincidence that over-compensation seems to take place most often when the organisation concerned is a government body that will not suffer financially for the over-compensation.

The denial curve can thus be seen as follows: (chart 1)

Denial is the first stage of the grieving process, a process triggered by any traumatic event. (The other stages are anger, bargaining, depression and acceptance. Anger also features in some of the ways in which crises are badly handled – anger with the media, the regulators, and even the customers or consumers – the very groups of people with whom impeccable relations are needed during a crisis.) In the words of a consultant who helped one organisation recover from crisis, “For several days the senior management was incapable of making cogent decisions because of the shock of seeing their colleagues killed or maimed and their business destroyed”. Whilst a reputation-threatening crisis may not be as traumatic as one that is accompanied by loss of life, trauma will still be present, and with it denial and, in the early stages, the inability to act.

The reputational damage from underplaying the crisis and avoiding responsibility in the early stages can be considerable. The organisation appears arrogant, uncaring and unsympathetic. Where there is loss of life, relatives with their own emotional and psychological needs are given a bureaucratic response: they want sympathy, facts, expressions of regret and apologies, and often get a buttoned-up lawyer reading out a cold, denying press statement (Coca-Cola Belgium). Where denial delays or minimises remedial action, the tragic consequences can be loss of life (both NASA mission failures) or massive costs. (In the enquiry into the UK foot and mouth crisis, one scientist estimated that the unwarranted three-day delay in introducing a national ban made the outbreak between two and three times as large as it would otherwise have been. The crisis cost the UK economy £8bn.)

Combating the denial curve

So, how can these unfortunate, very human traits be avoided? No amount of crisis management planning will help here; indeed, the author has observed how, in crisis simulations where realistic pressure is applied, crisis plans are often ignored in the heat of the moment. Crisis management plans can be very valuable, but they do not address the human reaction to the type of crisis that we are considering. Three things do, however, make a significant difference:

- Crisis experience. Experience of handling a "full-blown" crisis can be invaluable. As real crises are comparatively rare, a simulated crisis can provide just that experience without any of the consequences of the real thing.

- Choice of leader. One of the salutary lessons of 9/11 is that some splendid leaders in a "normal" situation lost their leadership qualities in the trauma of the event whereas others not identified as natural leaders coped admirably. In the absence of a genuine crisis, only a crisis simulation will identify the best crisis leaders. It is also important that the leader is able to take a holistic view, embracing reputation, corporate values and stakeholder imperatives, rather than the more blinkered view that might possibly be imposed by, for example, a finance director or a corporate lawyer.

- A process that imposes a structured reappraisal of the key questions (those outlined above, varied according to the nature of the crisis) each time key new information is received. Of course, such an approach will only succeed if the crisis group is encouraged, cajoled or led by example to seek unbiased decisions, and is not allowed or encouraged to perpetuate its belief in a cause-severity-blame scenario that is commercially desirable but less than likely.

All of this raises the question of what, in addition to crisis simulations, can be done to prepare. Crisis management plans are very useful for taking care of the logistics of a crisis, but:

- Very few organisations have crisis management plans of any quality (as opposed to business continuity plans, which are more common though still lamentably rare).

- Crisis management plans tend to concentrate on crisis logistics rather than on the psychological behaviour of the crisis team.

Creating a response to the denial syndrome

In the author’s view, what was needed was a new type of process, one which would:

- Apply particularly to those types of crisis where the key facts are unknown at the outset (the majority)

- Supplement and complement a crisis management plan and, where there is none, make a significant contribution on its own to the performance of the crisis management team, particularly in overcoming the denial curve and group think

- Be capable of being run even with no pre-planning, training or preparation: not a desirable situation but, regrettably, a realistic one.

Where plans were already in place or were being developed, the new process, Dynamic Crisis Management, would supplement and complement existing crisis and business continuity plans to form a modular approach.

Initially the process was developed on paper, as a collection of forms and instructions, so that it could be run without a computer. This initial version was capable of:

- Supporting a brainstorm that would establish or fine-tune the scenario alternatives for the four key questions outlined above (cause, breadth, repeatability, blame)

- Providing a process for reassessing and monitoring the likelihood of the scenarios within the four key questions each time new critical information was received

- Tracking the allegations of the media, grouped if necessary (for example, the national quality press, the international press and the national tabloids), and the opinions of one or more stakeholder groups (customers, employees, shareholders and so on), and displaying these on a series of timelines so that the movement in opinion could be tracked, extrapolated and pre-empted.
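The structured reappraisal described in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Dynamic Crisis Management forms or software themselves, whose internals the article does not describe: the class name `Question`, the 0 to 100 likelihood scores and the example scenarios are all hypothetical. The design choice it tries to capture is that every reappraisal must re-score every scenario, so that an inconvenient alternative cannot simply be dropped, and that the moment the best-case scenario stops being the most likely one is flagged explicitly.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Question:
    """One key question (cause, breadth, repeatability or blame) and its scenarios."""
    name: str
    best_case: str                 # the scenario the team would most like to be true
    likelihoods: dict[str, int]    # scenario -> assumed likelihood score, 0 to 100
    history: list[tuple[datetime, str, dict[str, int]]] = field(default_factory=list)

    def reappraise(self, new_info: str, likelihoods: dict[str, int], when: datetime) -> None:
        """Re-score every scenario in the light of new_info, keeping the old scores."""
        if set(likelihoods) != set(self.likelihoods):
            # Forcing a score for every scenario stops alternatives being quietly dropped.
            raise ValueError("every scenario must be re-scored at each reappraisal")
        self.history.append((when, new_info, dict(self.likelihoods)))
        self.likelihoods = dict(likelihoods)

    def most_likely(self) -> str:
        return max(self.likelihoods, key=self.likelihoods.__getitem__)

    def best_case_challenged(self) -> bool:
        """True once the best-case scenario is no longer rated the most likely."""
        return self.most_likely() != self.best_case


# Illustrative use with invented scenarios and scores.
cause = Question(
    name="cause",
    best_case="isolated accident",
    likelihoods={"isolated accident": 60, "systemic defect": 30, "deliberate": 10},
)
cause.reappraise(
    "second incident reported at another site",
    {"isolated accident": 25, "systemic defect": 55, "deliberate": 20},
    datetime(2003, 6, 1, 14, 30),
)
print(cause.most_likely())           # systemic defect
print(cause.best_case_challenged())  # True
```

Keeping the earlier scores in `history` means the team can later see how, and on the strength of what information, its view shifted.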

Real life testing

In this format, it proved itself in two real crisis situations:

The first time it was used, there was only one certain fact: there had been a fatality at the organisation’s premises. There was an unsubstantiated allegation that the deceased was a senior board member of a major client of the company in question. The death resulted from a tragic accident at a client-owned test facility, and it was quite possible that the organisation’s procedures, processes, employees or product, singly or in combination, would be found to be responsible. No more information was forthcoming, the police had sealed off the area and the media were pressing for news. There was no crisis plan, and the organisation had no experience, actual or simulated, of managing a crisis. The process helped the crisis group first to brainstorm the range of possible causes and the range of possibilities within the three severity measures listed above (breadth, repeatability and fault). It then helped them to keep these under continual review as new information emerged, and to adjust their response accordingly. It also helped them to monitor and track the direction of the media’s questions and allegations. It is easy to see how, with so much information missing, group think and denial could have caused serious mistakes; instead, the reappraisal process forced the crisis team to look objectively at each emerging fact and respond accordingly, and the crisis was handled effectively.

The second time was a food contamination incident. The author had run a simulated crisis and provided training in the use of the structured reappraisal process, and shortly afterwards the company in question faced a real crisis. It had not had time to complete its crisis management plans, but the experience of the simulation and the use of the reappraisal process enabled it to handle the crisis without falling into either group think or the denial curve.

Perhaps the best measure of success is that, although there was press interest in both incidents, both were handled quietly and competently, neither hit the headlines, and there was no impact on either company’s share price or financial results.

Other vital crisis needs

It was, however, evident from the author’s studies of the way in which crises are handled that, in addition to the denial curve and group think, there was a further problem: a process was needed to impose structure on record keeping during the crisis, and to do so as unobtrusively and transparently as possible. The need for this was clear and had been highlighted in, for example, the official enquiry into the 2001 UK foot and mouth crisis, which criticised poor record keeping and confused accounts of events that had resulted in crucial information not being obtained: “While some policy decisions were recorded with commendable clarity, some of the most important ones taken during the outbreak were recorded in the most perfunctory way, and sometimes not at all.”1 The author has observed that, even in the trauma of a simulated crisis, basic management skills such as delegation and orderly record keeping can quickly disappear. Speculative information becomes confused with completely reliable information. The reasons for decisions and, even more importantly, the assumptions on which they are based and the actions that they trigger go unrecorded.

It was therefore decided to convert the paper process into a computerised version so that automated record keeping could be incorporated. The software version was designed to concentrate not only on recording information in a structured way (for example, by attaching a confidence rating to each item of information and an importance rating to every action) but also on providing a clear way of recalling, sorting and searching all of the information, unknown information, decisions and assumptions and, most importantly, the links between them.
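The kind of linked record keeping described above might be modelled along the following lines. This is a hypothetical Python sketch, not the author's software: the `Entry` and `CrisisLog` names, the 1 to 5 rating scales and the `weak_foundations` query are assumptions made for illustration. Each entry records its kind, a confidence rating (for information) or an importance rating (for actions), and links to the entries it depends on, so that the log can be searched and any decision or action can be traced back to the speculative information and unverified assumptions beneath it.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Entry:
    """One item in the crisis log."""
    id: str
    kind: str                        # "information", "unknown", "assumption", "decision" or "action"
    text: str
    recorded: datetime
    confidence: int | None = None    # information only: 1 (speculative) to 5 (confirmed)
    importance: int | None = None    # actions only: 1 (low) to 5 (critical)
    based_on: list[str] = field(default_factory=list)  # ids of the entries this one relies on


class CrisisLog:
    def __init__(self) -> None:
        self.entries: dict[str, Entry] = {}

    def add(self, entry: Entry) -> None:
        # Links may only point at entries already in the log.
        missing = [ref for ref in entry.based_on if ref not in self.entries]
        if missing:
            raise KeyError(f"unknown linked entries: {missing}")
        self.entries[entry.id] = entry

    def search(self, word: str) -> list[Entry]:
        """Entries whose text contains word (case-insensitive), oldest first."""
        hits = [e for e in self.entries.values() if word.lower() in e.text.lower()]
        return sorted(hits, key=lambda e: e.recorded)

    def weak_foundations(self, entry_id: str, threshold: int = 2) -> list[str]:
        """Ids of assumptions and low-confidence information that entry_id rests on,
        directly or through other linked entries."""
        found: list[str] = []
        seen: set[str] = set()
        stack = list(self.entries[entry_id].based_on)
        while stack:
            ref = stack.pop()
            if ref in seen:
                continue
            seen.add(ref)
            entry = self.entries[ref]
            shaky_info = (entry.kind == "information"
                          and entry.confidence is not None
                          and entry.confidence <= threshold)
            if entry.kind == "assumption" or shaky_info:
                found.append(ref)
            stack.extend(entry.based_on)
        return sorted(found)


# Illustrative entries, loosely modelled on the first real-life case above.
log = CrisisLog()
t = datetime(2003, 6, 1, 9, 0)
log.add(Entry("I1", "information", "Police confirm one fatality on site", t, confidence=5))
log.add(Entry("I2", "information", "Unverified claim: victim was a client board member", t, confidence=1))
log.add(Entry("A1", "assumption", "Our product was not involved", t))
log.add(Entry("D1", "decision", "Issue holding statement expressing regret", t, based_on=["I1", "A1"]))
log.add(Entry("X1", "action", "Brief switchboard staff on holding statement", t,
              importance=4, based_on=["D1", "I2"]))
print(log.weak_foundations("X1"))               # ['A1', 'I2']
print([e.id for e in log.search("statement")])  # ['D1', 'X1']
```

A query such as `weak_foundations` is one way of surfacing the links between information, assumptions and decisions that, on the author's observation, most often go unrecorded under pressure.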

Pressurised teams lose the ability to view the complete picture, instead focussing only on what is most pressing. The ability to pull the camera back into a long time sequence or to undertake a structured interrogation of all of the facts and issues can be invaluable.

However, the key success factor is the behaviour of the crisis team leader. There is a great deal to be said for using an experienced external facilitator who is well versed in the process. This brings specific facilitation skills, together with someone who is distanced from both the emotions of the event and the hidden commercial and personal objectives that are so often imposed on the rest of the team.

Whether imposed by the team leader or by a process, the adoption of openness and realism in place of denial will depend on the extent to which the crisis team accepts that denial will, in the long run, cause significant harm to corporate and personal reputation. Of that there can be little doubt, as the list of casualties (organisations that, consciously or unconsciously, tried the denial route) so clearly demonstrates.

1 Anderson report, 7/02

© David Davies, 6/03

David Davies is Managing Director of Davies Business Risk Consulting Ltd. He also heads the reputation risk and crisis management stream for idRisk, a network of specialised, independent risk advisors who are regarded as experts in their respective fields and who can provide customers with comprehensive, high quality unbiased advice on, and solutions to, all aspects of risk a company may encounter.
